Reducing Our Data Infrastructure Costs by 76% by Migrating from Snowflake to Databricks

Background

At What If Media Group, data science and machine learning are the backbone of our business. As a performance marketing company, our data infrastructure empowers us to ingest a vast quantity of first-party user data, generate a wealth of insights from that data, and train models to respond to users’ needs and preferences. Ultimately, the robustness and quality of our data platform directly impact our success and ability to drive powerful experiences for users and customers.

Over the past few years, WIMG has grown significantly. With this growth came new data sources, new modeling frameworks, new requirements for our data architecture, and significant increases in our data infrastructure costs. To continue driving powerful user experiences for our customers, we had to introduce several key capabilities that were not available in our Snowflake-centered data stack:

1. Process events and make predictions in real-time: Reducing the time between user events and marketing communications is critical to the effectiveness of our campaigns. Snowflake’s immature support for streaming prevented us from achieving response latencies of less than a minute, so we needed to introduce streaming-first technology into our ecosystem.

2. Provide advanced data science and MLOps capabilities out of the box: As our data science team grew, it became increasingly important to give team members an environment where they could train and experiment with models without manually maintaining ML infrastructure. As the number of models in production increased, so did the technical debt associated with our legacy data science environment. Our data scientists were spending 20-30% of their time maintaining our MLOps processes and fixing Airflow pipelines associated with model scoring and tracking.

3. Adopt a more scalable data architecture: As the number of customers and data sources grew, so did the complexity and cost of our Snowflake-centered data infrastructure. We wanted to adopt a more open data architecture where our data scientists and ML engineers could quickly and easily leverage these data sources without ballooning our costs.

The Challenge with Our Legacy Stack

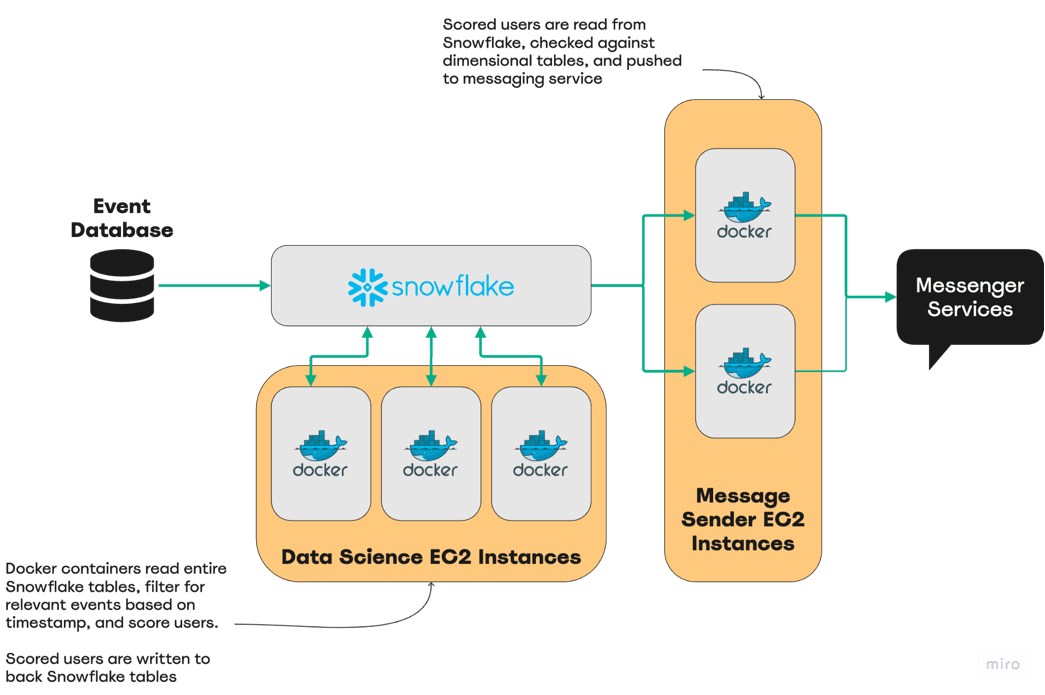

Before Databricks, our architecture was centered around Snowflake as our sole data warehouse: all of the data needed by our Product and AI teams was first ingested into Snowflake tables and then pulled out of Snowflake for model development and user scoring. Our model training and scoring workflows ran in self-managed Docker containers hosted on EC2 instances and orchestrated with Airflow.

To score and respond to user events, we implemented a batch process, in which Docker containers would load tables from Snowflake, filter for applicable users, score those users, and then push marketing recommendations to our messaging system every 2-30 minutes.

Although Snowflake serves its role as a data warehouse very well, using it for our core marketing messaging use case was always challenging, and these challenges became more urgent as our business and data volumes grew. In particular:

- Our batch process required us to reload entire event tables every time we wanted to score users or send a message, which led to many redundant table loads. As a result, our Snowflake bill grew by several million dollars year over year.

- As our data volumes increased, it became increasingly difficult to process our queries in anything close to real time, which limited the response latency we could offer for certain pipelines we were developing for our customers.
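To make the redundancy in the first point concrete, here is a minimal sketch that counts how many event rows each approach reads over a series of scoring cycles. It assumes, purely for illustration, an append-only event table that grows by a fixed number of rows per cycle; the function names and sizes are hypothetical, not figures from our pipelines.

```python
def rows_read_full_reload(initial_rows: int, new_rows_per_cycle: int, cycles: int) -> int:
    """Total rows read when every scoring cycle reloads the entire event table."""
    total = 0
    table_size = initial_rows
    for _ in range(cycles):
        table_size += new_rows_per_cycle  # events appended since the previous cycle
        total += table_size               # the whole table is read again
    return total


def rows_read_incremental(new_rows_per_cycle: int, cycles: int) -> int:
    """Total rows read when each cycle processes only events it has not seen yet."""
    return new_rows_per_cycle * cycles


if __name__ == "__main__":
    # Hypothetical sizes: 1M existing events, 10k new events per cycle, 30 cycles.
    full = rows_read_full_reload(1_000_000, 10_000, 30)
    incremental = rows_read_incremental(10_000, 30)
    print(f"full reload: {full:,} rows, incremental: {incremental:,} rows")
```

Under these assumptions, full-reload cost scales with table size times the number of cycles, while incremental cost scales only with the volume of new events, so running cycles more often multiplies the full-reload cost.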

The Solution: Databricks

When we started to look at alternative solutions, we immediately gravitated toward Databricks, a well-established leader in the data, analytics, and AI space. We wanted to evaluate whether the Databricks Lakehouse Platform could run cost-effective, low-latency streaming workflows, provide out-of-the-box data science and MLOps solutions, and scale efficiently with our growing data volumes.

After validating this via a proof of concept, we embarked on making several key changes to our pipelines:

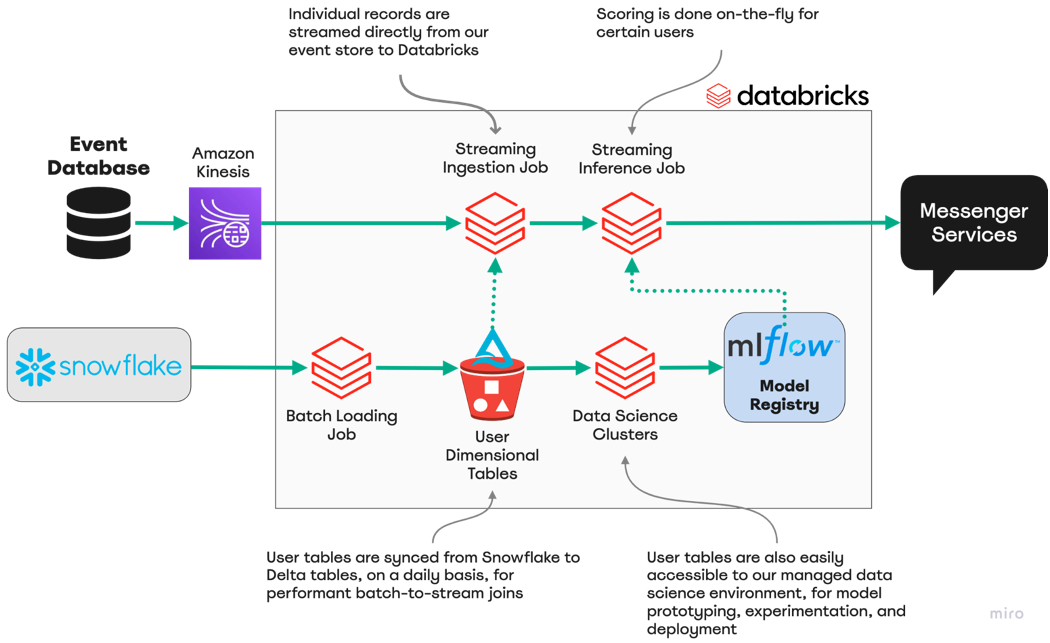


- Leveraging Spark Structured Streaming to ingest user events directly from our event system for real-time scoring and delivery to our messaging queue.

- Migrating key user dimensional tables from Snowflake to Databricks for stream-to-batch joining.

- Migrating our data science environment from self-hosted Docker containers to Databricks clusters.

The Results

These efforts yielded immediate benefits for our team in three key areas: cost, performance, and productivity. Although it was not the focus of this initiative, we have also realized significant incremental revenue as a direct result of being able to send more direct marketing messages, thanks to lower latency and a lower cost per message.

Cost Savings

By transitioning to a streaming-first architecture on Databricks, we reduced the TCO of our marketing messaging pipelines by an average of 76%. These cost savings were driven by several key capabilities that were unlocked with Databricks but were not available with Snowflake.

By migrating key dimensional tables from Snowflake to Delta and streaming events directly from our event store, we no longer had to reload entire Snowflake tables every 2 minutes to score users and push our marketing messages. Instead, we could process individual events, joining them to specific dimensional tables as needed and scoring them on the fly for specific use cases. Additionally, access to more flexible, compute-optimized resources allowed us to consolidate several streaming workloads onto a single cluster. This significantly reduced the amount of compute these pipelines required, resulting in major cost savings across our organization.
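The per-event flow described above (pick up new events, join each to a dimensional table, score it, emit a message) can be sketched in plain Python as a micro-batch loop. This is a conceptual model of a stream-static join, not Spark Structured Streaming code: the in-memory event log, the `user_dims` lookup, the integer offset "checkpoint", and the threshold scoring rule are all hypothetical stand-ins. In Spark, offset tracking is handled by the streaming query's checkpoint location and the join is expressed against a static DataFrame such as a Delta table.

```python
from dataclasses import dataclass


@dataclass
class Event:
    offset: int      # position in the event log, used for checkpointing
    user_id: str
    event_type: str


def score(event: Event, dims: dict) -> float:
    """Hypothetical scoring rule standing in for a trained model."""
    base = 0.5 if event.event_type == "click" else 0.1
    return base + 0.1 * dims.get("recent_purchases", 0)


def process_micro_batch(log: list, checkpoint: int, user_dims: dict, threshold: float = 0.5):
    """Score only events past `checkpoint`; return (messages, new_checkpoint)."""
    new_events = [e for e in log if e.offset > checkpoint]
    messages = []
    for event in new_events:
        dims = user_dims.get(event.user_id)  # lookup against the dimensional table
        if dims is None:
            continue  # inner-join semantics: no dimension row, no message
        s = score(event, dims)
        if s >= threshold:
            messages.append((event.user_id, round(s, 2)))
    # Advance the checkpoint so the next micro-batch skips events already handled.
    new_checkpoint = max((e.offset for e in new_events), default=checkpoint)
    return messages, new_checkpoint


if __name__ == "__main__":
    log = [Event(1, "u1", "click"), Event(2, "u2", "view"), Event(3, "u3", "click")]
    user_dims = {"u1": {"recent_purchases": 2}, "u2": {"recent_purchases": 0}}
    messages, checkpoint = process_micro_batch(log, 0, user_dims)
    print(messages, checkpoint)  # u1 is messaged; u2 scores too low; u3 has no dimension row
```

Because each call touches only events past the checkpoint, the work per cycle tracks the number of new events rather than the size of the event table.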

Taking advantage of Databricks’ flexible compute for our data science and machine learning teams allowed us to deploy clusters on an as-needed basis, as opposed to having an EC2-hosted data science environment run 24/7. This reduced the overhead for our data science teams and decreased the cost of maintaining development environments for exploratory analysis.
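A back-of-the-envelope comparison shows why moving from an always-on environment to on-demand clusters reduces compute. The sketch below assumes, hypothetically, that each work session starts its own cluster, that sessions do not overlap, and that the cluster shuts down after a fixed idle timeout (Databricks clusters can be configured to auto-terminate after a period of inactivity). It counts compute hours only and ignores differences in instance pricing; all numbers are illustrative, not WIMG figures.

```python
HOURS_PER_WEEK = 24 * 7  # an always-on instance is billed every hour of the week


def always_on_hours(instances: int) -> int:
    """Weekly compute hours for instances that run 24/7."""
    return instances * HOURS_PER_WEEK


def on_demand_hours(session_hours: list[float], idle_timeout_hours: float) -> float:
    """Weekly compute hours when each work session starts a cluster that
    auto-terminates after an idle timeout (sessions assumed not to overlap)."""
    return sum(hours + idle_timeout_hours for hours in session_hours)


if __name__ == "__main__":
    # Hypothetical week: two always-on EC2 hosts vs. three on-demand cluster sessions.
    sessions = [3.0, 4.0, 2.5]
    print(always_on_hours(2), on_demand_hours(sessions, idle_timeout_hours=0.5))
```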

Performance Improvements

The new streaming architecture on Databricks also allowed us to respond to user events in real time. Because our legacy architecture ran as a batch process that required full table reloads, the minimum latency we could support was 2 minutes after an event. With Databricks, we reduced our response time to 20 seconds, roughly an 83% reduction (about six times faster). We were also able to cut the runtime of other batch processes, such as updating user dimensional tables, by up to 80% by converting them to the Delta Lake format and using Z-Order indexing.
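Z-ordering clusters rows along a space-filling curve so that per-file min/max statistics can skip files for filters on any of the clustered columns. The sketch below is a toy model of that idea, not Delta Lake's implementation: it interleaves the bits of two integer columns into a Morton (Z-order) key, lays rows out into fixed-size files in key order, and uses hypothetical per-file min/max stats to decide which files a point filter must read.

```python
def z_order_key(x: int, y: int, bits: int = 16) -> int:
    """Interleave the bits of x and y (Morton code): x -> even bit positions, y -> odd."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)
        key |= ((y >> i) & 1) << (2 * i + 1)
    return key


def build_files(rows: list, rows_per_file: int) -> list:
    """Sort (x, y) rows by Z-order key, chunk them into files, and record min/max stats."""
    ordered = sorted(rows, key=lambda r: z_order_key(r[0], r[1]))
    files = []
    for start in range(0, len(ordered), rows_per_file):
        chunk = ordered[start:start + rows_per_file]
        files.append({
            "min": (min(r[0] for r in chunk), min(r[1] for r in chunk)),
            "max": (max(r[0] for r in chunk), max(r[1] for r in chunk)),
        })
    return files


def files_to_scan(files: list, column: int, value: int) -> list:
    """Data skipping: keep only files whose [min, max] range for `column` can contain `value`."""
    return [f for f in files if f["min"][column] <= value <= f["max"][column]]


if __name__ == "__main__":
    grid = [(x, y) for x in range(4) for y in range(4)]
    files = build_files(grid, rows_per_file=4)
    print(len(files_to_scan(files, 0, 0)), len(files_to_scan(files, 1, 3)))
```

On this 4x4 grid with four rows per file, a filter on either column reads two of the four files. Sorting by a single column instead would make filters on that column read one file but force filters on the other column to read all four.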

Productivity

The other significant benefit of this migration is the productivity gain experienced by our data teams. Before Databricks, our data science team spent 20-30% of their time maintaining our ML infrastructure and diving through logs to troubleshoot broken Airflow processes.

With Databricks, we were able to offload almost all of our orchestration work to Databricks Workflows, which improved stability. By leveraging MLflow, Feature Store, and AutoML, we can now rapidly iterate on and optimize our models in a fully managed environment. Databricks-managed clusters also let us easily install and update libraries on our experimentation clusters and even access GPU compute as needed. Because these industry-standard capabilities are built natively into Databricks, each data scientist saves about 10 hours per week, plus an additional 10 hours per week that no longer go to managing infrastructure, enabling us to do more with less.

Bonus: Revenue

We can now respond to user events in near real time at a significantly lower cost. These two factors, plus the efficiency gains, enable us to send 40% more messages through this delivery mechanism, resulting in a 5% increase in direct revenue. We have also seen a 5% increase in indirect revenue because our models are more effective and accurate.

Conclusion

Migrating these workloads from Snowflake to Databricks was a game-changer for WIMG. The transition to streaming has provided us with near-real-time response capabilities. This enables us to make better, data-driven decisions, which is core to the services we provide to our clients. The enormous cost savings we’ve experienced have also allowed us to invest more in our AI capabilities.

We are now evaluating the data warehouse capabilities within Databricks to make sure we are using the most cost-performant and effective tool for all of our data needs.

Databricks is now a key component of our core data infrastructure at WIMG and empowers us to run business-critical pipelines that directly benefit our organization and our customers.


About What If Media Group

Founded in 2012, What If Media Group is an award-winning performance marketing company that enables the world’s leading brands to acquire valuable new customers at scale. By leveraging data-driven engagement and re-engagement strategies across multiple proprietary marketing channels and sophisticated targeting technology, and utilizing insights based on millions of consumer ad interactions each day, What If Media Group delivers the most cost-effective and highest performing marketing campaigns for its clients.